SVM Classification

C-Support Vector Classification

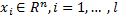

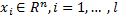

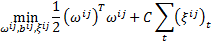

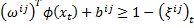

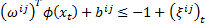

Given training vectors  in two classes, and an indicator vector

in two classes, and an indicator vector  such that

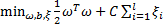

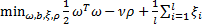

such that  C-SVC (B. E. Boser, I. Guyon, and V. Vapnik. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual

Workshop on Computational Learning Theory, pages 144–152. ACM Press, 1992.; C. Cortes and V. Vapnik. Support-vector network. Machine

Learning, 20:273–297, 1995) solves the following primal optimization problem.

C-SVC (B. E. Boser, I. Guyon, and V. Vapnik. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual

Workshop on Computational Learning Theory, pages 144–152. ACM Press, 1992.; C. Cortes and V. Vapnik. Support-vector network. Machine

Learning, 20:273–297, 1995) solves the following primal optimization problem.

,

,

subject to  ,

,

,

,

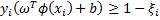

where  maps

maps

into a higher-dimensional space and

into a higher-dimensional space and  is the regularization parameter.

is the regularization parameter.
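To make the role of the regularization parameter concrete, here is a minimal sketch that evaluates the C-SVC primal objective for the linear case, assuming the feature map is the identity (phi(x) = x). For fixed w and b, the smallest feasible slack for observation i is max(0, 1 - y_i (w^T x_i + b)), so the objective can be computed directly. The names `CSvcPrimal` and `Objective` are hypothetical and are not part of the library.

```csharp
using System;

// Hypothetical helper, not part of the library: evaluates the C-SVC primal
// objective for a linear feature map (phi(x) = x).
public static class CSvcPrimal
{
    public static double Objective(double[][] x, int[] y, double[] w, double b, double c)
    {
        // Regularization term: 0.5 * w^T w.
        double value = 0.0;
        foreach (double wj in w)
            value += 0.5 * wj * wj;

        for (int i = 0; i < x.Length; ++i)
        {
            // Decision value w^T x_i + b.
            double f = b;
            for (int j = 0; j < w.Length; ++j)
                f += w[j] * x[i][j];

            // Smallest slack satisfying y_i * f >= 1 - xi_i and xi_i >= 0.
            double slack = Math.Max(0.0, 1.0 - y[i] * f);
            value += c * slack;
        }

        return value;
    }
}
```

For example, with observations (2, 0), (-2, 0), (0.5, 0), labels 1, -1, -1, w = (1, 0), b = 0 and C = 10, only the third observation violates the margin (slack 1.5), so the objective is 0.5 + 10 · 1.5 = 15.5. A larger C penalizes that violation more heavily.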

ν-Support Vector Classification

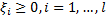

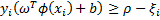

The ν-support vector classification (B. Schölkopf, A. Smola, R. C. Williamson, and P. L. Bartlett. New support vector algorithms. Neural Computation, 12:1207–1245, 2000) introduces a new parameter $\nu \in (0, 1]$. It is proved that $\nu$ is an upper bound on the fraction of training errors and a lower bound on the fraction of support vectors.

Given training vectors $x_i \in \mathbb{R}^n$, $i = 1, \dots, l$, in two classes, and a vector $y \in \mathbb{R}^l$ such that $y_i \in \{1, -1\}$, the primal optimization problem is

$$\min_{w, b, \xi, \rho} \quad \frac{1}{2} w^T w - \nu \rho + \frac{1}{l} \sum_{i=1}^{l} \xi_i$$

subject to $y_i (w^T \phi(x_i) + b) \ge \rho - \xi_i$, $\xi_i \ge 0$, $i = 1, \dots, l$, $\rho \ge 0$.
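As a quick worked instance of the bounds on ν (the numbers are illustrative assumptions, not taken from the library): with $l = 200$ training vectors and $\nu = 0.1$,

$$\#\{\text{training errors}\} \;\le\; \nu l = 0.1 \cdot 200 = 20 \;\le\; \#\{\text{support vectors}\},$$

so at most 20 training vectors can be training errors and at least 20 must be support vectors.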

Multi-class classification

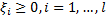

The library implements the “one-against-one” approach (S. Knerr, L. Personnaz, and G. Dreyfus. Single-layer learning revisited: a stepwise procedure for building and training a neural network. In J. Fogelman, editor, Neurocomputing: Algorithms, Architectures and Applications. Springer-Verlag, 1990) for multiclass classification. If k is the number of classes, then k(k − 1)/2 classifiers are constructed, each trained on data from two classes. For training data from the i-th and j-th classes, the following two-class classification problem is solved.

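$$\min_{w^{ij}, b^{ij}, \xi^{ij}} \quad \frac{1}{2} (w^{ij})^T w^{ij} + C \sum_{t} \xi^{ij}_t$$

subject to $(w^{ij})^T \phi(x_t) + b^{ij} \ge 1 - \xi^{ij}_t$, if $x_t$ belongs to the i-th class,

$(w^{ij})^T \phi(x_t) + b^{ij} \le -1 + \xi^{ij}_t$, if $x_t$ belongs to the j-th class,

$\xi^{ij}_t \ge 0$.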

Classification uses a voting strategy: for a data point x, each binary classifier casts a vote for one of its two classes, and the point is assigned to the class with the largest number of votes.

If two classes receive the same number of votes, the class that appears first in the array storing the class names is chosen, although this may not be the best strategy.
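The voting procedure can be sketched as follows. This is an illustrative sketch, not the library's implementation: `OneAgainstOneVoting`, `Predict`, and the `pairwiseWinner` delegate (which stands in for the trained binary classifier for classes i and j) are hypothetical names. Ties go to the class that appears first in the class array.

```csharp
using System;

// Hypothetical sketch of one-against-one voting; not part of the library.
public static class OneAgainstOneVoting
{
    // classes: class labels in their stored order.
    // pairwiseWinner(i, j): index (i or j) of the class chosen by the binary
    // classifier trained on classes[i] and classes[j] for the current observation.
    public static int Predict(int[] classes, Func<int, int, int> pairwiseWinner)
    {
        if (classes.Length == 0)
            throw new ArgumentException("At least one class is required.", nameof(classes));

        int k = classes.Length;
        int[] votes = new int[k];

        // Evaluate all k(k - 1)/2 binary classifiers; each casts one vote.
        for (int i = 0; i < k; ++i)
            for (int j = i + 1; j < k; ++j)
                votes[pairwiseWinner(i, j)]++;

        // The strict comparison keeps the earliest class when votes are tied.
        int best = 0;
        for (int i = 1; i < k; ++i)
            if (votes[i] > votes[best])
                best = i;

        return classes[best];
    }
}
```

With three classes, three binary classifiers vote. If each class receives exactly one vote, `Predict` returns the first class in the array.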

The SVMClassification class contains the SVMClassificationTypeClassifier enumeration, which selects the C- or ν-support vector classifier type. The class provides the following methods:

| Method | Description | Performance |
|---|---|---|
| train | The IBaseClassifier interface function. Trains a C-support vector classifier (TrainCSVC) by default. | |
| train C-support | Trains a C-support vector classifier. | |
| train ν-support | Trains a ν-support vector classifier. | |
| classify | Classifies an observation vector into one of the classes. | |

Example of SVM classification usage:

```csharp
public static void ClassificationTest()
{
    Console.WriteLine("********************************************************************");
    Console.WriteLine("ClassificationTest");
    Console.WriteLine("********************************************************************");

    // Train model: build a 10 x 3 observation matrix and assign class labels 1..5.
    Matrix obs = new Matrix(10, 3, 0);
    IntegerArray cl = new IntegerArray(obs.Rows);
    Double d = 0;
    for (Int32 r = 0; r < obs.Rows; ++r)
    {
        for (Int32 c = 0; c < obs.Columns; ++c)
        {
            obs[r, c] = (d % 15) + 1;
            d++;
        }
        cl[r] = (r % 5) + 1;
    }

    SVMClassification model = new SVMClassification();
    model.TrainCSVC(obs, cl, kernel: new FMSVM.KernelLinear());

    // Save/Load model: persist the model, clear it, and restore it.
    model.Save("svm_csvc");

    model.Clear();

    model.Load("svm_csvc");

    // Classify observations: compare predictions with the training labels.
    Console.WriteLine("Classification example:");
    for (int ri = 0; ri < obs.Rows; ++ri)
        Console.WriteLine($"Real class {cl[ri]}, SVM classification {model.Classify(obs.GetRow(ri))}");

    // Get information about the trained model.
    Console.WriteLine($"SVM type: {model.Type}; Kernel {model.Kernel};");

    Console.WriteLine($"SVM classes list: {model.Classes}");
}
```