Least Squares Mutual Information (LSMI)

Mutual information captures all kinds of statistical dependence, including nonlinear dependence. The mutual information of two random variables equals zero if and only if they are statistically independent.

LSMI approximates an alternative version of mutual information (squared-loss mutual information) between two scalar series by approximating a density ratio, and uses cross-validation to select the model parameters.


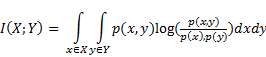

Mutual information of two continuous random variables X, Y can be defined as:

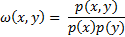
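For reference, the usual form of this definition, together with the squared-loss mutual information (SMI) that LSMI approximates (the "alternative version" mentioned above), can be written as follows. This assumes the image shows the standard definition; the SMI form follows the original LSMI formulation.

$$
I(X, Y) = \iint p(x, y) \log \frac{p(x, y)}{p(x)\,p(y)} \, dx \, dy
$$

$$
\mathrm{SMI}(X, Y) = \frac{1}{2} \iint \left( \frac{p(x, y)}{p(x)\,p(y)} - 1 \right)^{2} p(x)\,p(y) \, dx \, dy
$$

Both quantities are non-negative and equal zero exactly when X and Y are independent, that is, when the density ratio $p(x, y) / (p(x)\,p(y))$ equals 1.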

Computing continuous MI directly from its definition on a finite data set is impossible, because the definition requires the exact probability density functions, so an approximation is needed. Here the mutual information between two data series is approximated with the Least Squares Mutual Information (LSMI) method. LSMI takes two data series as input and approximates the mutual information between them without estimating the densities themselves; instead, it directly models the density ratio:

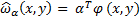

by the following linear model:

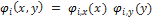

where $\alpha = (\alpha_1, \alpha_2, \dots, \alpha_b)^{\top}$ are parameters to be learned from samples, and $\varphi(x, y) = (\varphi_1(x, y), \varphi_2(x, y), \dots, \varphi_b(x, y))^{\top}$ are basis functions such that $\varphi(x, y) \geq 0_b$ for all $(x, y)$. Here $0_b$ denotes the b-dimensional vector of zeros and b is the basis size of the model. The basis functions φ(x, y) are constructed as described in the algorithm steps below.

The steps of the algorithm are as follows (constructor input parameters are shown in bold):

The algorithm randomly chooses **basisSize** centers $\{c_l \mid c_l = (u_l^{\top}, v_l^{\top})^{\top}\}_{l=1}^{b}$ from the input data.
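The center selection can be sketched in a few lines of Python. This is an illustration under assumptions: the function name `choose_centers` and the `seed` argument are hypothetical, centers are assumed to be observed pairs $(x_i, y_i)$ drawn without replacement, and clipping b to the series length mirrors the documented default `basisSize = min(200, input vectors length)`.

```python
import random

def choose_centers(x, y, basis_size, seed=None):
    """Randomly pick b = min(basis_size, n) distinct sample pairs as kernel centers.

    Each center c_l = (u_l, v_l) is an observed pair (x_i, y_i); drawing the
    pairs without replacement is an assumption of this sketch.
    """
    n = len(x)
    if len(y) != n:
        raise ValueError("x and y must have the same length")
    b = min(basis_size, n)
    rng = random.Random(seed)
    idx = rng.sample(range(n), b)  # b distinct indices
    return [(x[i], y[i]) for i in idx]

centers = choose_centers([0.1, 0.5, 0.9, 1.3], [1.0, 2.0, 3.0, 4.0], basis_size=3, seed=0)
```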

Depending on the type of the input series, one of the following cases applies:

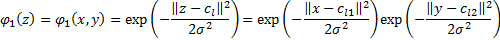

If both series are continuous (**useDelta** must be false), a Gaussian kernel model is used:

where σ is chosen by cross-validation from the candidate list **sigmaList**.

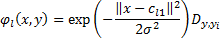
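A minimal sketch of the continuous case follows, assuming the Gaussian kernel has the product form used in the original LSMI formulation, $\varphi_l(x, y) = \exp\left(-\frac{(x - u_l)^2 + (y - v_l)^2}{2\sigma^2}\right)$. The helper names `gaussian_basis` and `density_ratio` are hypothetical; the second one evaluates the linear model $\alpha^{\top}\varphi(x, y)$.

```python
import math

def gaussian_basis(x, y, centers, sigma):
    """Gaussian kernel basis for two continuous series:
    phi_l(x, y) = exp(-((x - u_l)**2 + (y - v_l)**2) / (2 * sigma**2)).
    All values lie in [0, 1], so phi(x, y) >= 0 holds as required.
    """
    return [math.exp(-((x - u) ** 2 + (y - v) ** 2) / (2.0 * sigma ** 2))
            for (u, v) in centers]

def density_ratio(alpha, phi):
    """Linear model r_alpha(x, y) = alpha^T phi(x, y)."""
    return sum(a * p for a, p in zip(alpha, phi))

phi = gaussian_basis(0.0, 0.0, [(0.0, 0.0), (1.0, 1.0)], sigma=1.0)
r = density_ratio([2.0, 3.0], phi)
```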

If one of the series is discrete (the discrete series is assumed to be the second one, and **useDelta** must be true), the basis function uses a Kronecker delta $\delta_{y, y_i}$ for that series.
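The discrete case can be sketched the same way. The text only states that a Kronecker delta is used for the discrete series; keeping a Gaussian factor for the continuous x follows the original LSMI formulation and is an assumption here, as is the hypothetical name `delta_basis`.

```python
import math

def delta_basis(x, y, centers, sigma):
    """Basis for a continuous x and a discrete y (the useDelta case):
    phi_l(x, y) = exp(-(x - u_l)**2 / (2 * sigma**2)) * delta(y, v_l),
    where delta is the Kronecker delta (1 if y == v_l, else 0).
    """
    return [math.exp(-(x - u) ** 2 / (2.0 * sigma ** 2)) if y == v else 0.0
            for (u, v) in centers]

phi = delta_basis(0.5, "b", [(0.5, "a"), (0.5, "b"), (1.5, "b")], sigma=1.0)
```

Because y only enters through an equality test, the discrete labels can be any comparable values, not just numbers.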

If both series are discrete, this approximation is unnecessary, because the mutual information can be computed directly from the formula:

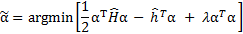
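For two discrete series, the plug-in version of this formula replaces each probability with a relative frequency. The sketch below assumes the natural logarithm (results in nats); the image may use a different base, and the function name is hypothetical.

```python
import math
from collections import Counter

def discrete_mutual_information(x, y):
    """Plug-in mutual information (natural log, i.e. nats) of two discrete series:
    I = sum over (a, b) of p(a, b) * log(p(a, b) / (p(a) * p(b))),
    with all probabilities replaced by relative frequencies.
    """
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    # p(a,b) / (p(a) p(b)) = (c/n) / ((px/n)(py/n)) = c * n / (px * py)
    return sum(c / n * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())

mi_indep = discrete_mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
mi_equal = discrete_mutual_information([0, 0, 1, 1], [0, 0, 1, 1])
```

The balanced independent design gives 0, while two identical fair binary series give log 2.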

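To estimate the model parameter vector α, the linear model is fitted to the true density ratio in the least-squares sense. The non-negativity constraint $\alpha_i \geq 0$ is not enforced, and a quadratic regularization term is added, which gives the following optimization problem: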

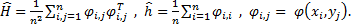

where λ ≥ 0 is the regularization parameter (Lagrange multiplier) and

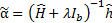
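As a reconstruction under the original least-squares formulation of LSMI (the images above may use different notation), the regularized problem and the empirical quantities read:

$$
\hat{\alpha} = \mathop{\mathrm{arg\,min}}_{\alpha \in \mathbb{R}^{b}} \left[ \frac{1}{2} \alpha^{\top} \hat{H} \alpha - \hat{h}^{\top} \alpha + \frac{\lambda}{2} \alpha^{\top} \alpha \right]
$$

$$
\hat{H} = \frac{1}{n^{2}} \sum_{i=1}^{n} \sum_{j=1}^{n} \varphi(x_i, y_j)\, \varphi(x_i, y_j)^{\top}, \qquad
\hat{h} = \frac{1}{n} \sum_{i=1}^{n} \varphi(x_i, y_i)
$$

The paired samples $(x_i, y_i)$ stand in for draws from $p(x, y)$, while all cross pairs $(x_i, y_j)$ stand in for draws from $p(x)\,p(y)$. Up to a constant, the first two terms are the empirical version of $\frac{1}{2}\iint \left(\alpha^{\top}\varphi(x, y) - \frac{p(x, y)}{p(x)\,p(y)}\right)^{2} p(x)\,p(y)\, dx\, dy$, the squared error between the model and the true density ratio.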

Setting the derivative of the objective function with respect to α to zero gives the analytic-form solution

$$
\hat{\alpha} = \left(\hat{H} + \lambda I_b\right)^{-1} \hat{h},
$$

where $I_b$ is the b-dimensional identity matrix and λ is chosen from the candidate list **lambdaList**. Because α is obtained by solving a single linear system (least squares), the fit is much faster than a constrained numerical optimization.
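A minimal pure-Python sketch of this closed-form fit for one fixed (σ, λ) pair with the Gaussian basis is shown below. It builds $\hat{H}$ and $\hat{h}$ as in the original LSMI formulation and returns the SMI estimate $\frac{1}{2}\hat{h}^{\top}\hat{\alpha} - \frac{1}{2}$. The function names, the plain Gaussian elimination solver, and the assumption that the LSMI class reports this particular estimator are illustrative, not taken from the class. The nested loops cost $O(n^2 b^2)$ operations, so the sketch is only suitable for small inputs.

```python
import math

def solve_linear(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def lsmi_fit(x, y, centers, sigma, lam):
    """Return (alpha, smi) for fixed sigma and lambda with the Gaussian basis.

    alpha = (H + lam * I_b)^{-1} h and smi = h^T alpha / 2 - 1/2, where
    h = mean of phi(x_i, y_i) and H = mean over all (i, j) of phi(x_i, y_j) phi(x_i, y_j)^T.
    """
    n, b = len(x), len(centers)

    def phi(xi, yj):
        return [math.exp(-((xi - u) ** 2 + (yj - v) ** 2) / (2.0 * sigma ** 2))
                for (u, v) in centers]

    h = [0.0] * b
    H = [[0.0] * b for _ in range(b)]
    for i in range(n):
        p = phi(x[i], y[i])  # paired samples approximate p(x, y)
        for l in range(b):
            h[l] += p[l] / n
        for j in range(n):
            q = phi(x[i], y[j])  # all pairs (x_i, y_j) approximate p(x) p(y)
            for l in range(b):
                for k in range(b):
                    H[l][k] += q[l] * q[k] / n ** 2
    for l in range(b):
        H[l][l] += lam  # ridge term lam * I_b
    alpha = solve_linear(H, h)
    smi = 0.5 * sum(hl * al for hl, al in zip(h, alpha)) - 0.5
    return alpha, smi
```

For example, `lsmi_fit([0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 2.0, 3.0], [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)], sigma=0.1, lam=1e-6)` returns an SMI estimate of about 1.5, the exact SMI of four equally likely, perfectly dependent points, while the balanced independent design `x = [0, 1, 0, 1]`, `y = [0, 0, 1, 1]` with the four observed pairs as centers gives about 0.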

The algorithm splits the data into **basketCount** baskets and performs cross-validation: for each basket, it estimates the model parameters on the data with that basket excluded and evaluates the fit on the excluded basket. The model with the best cross-validation score is used to calculate the final MI value.
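The cross-validation loop can be sketched as a grid search over the candidate lists. Several details are assumptions: baskets are filled round-robin here (the class may shuffle the data first), the held-out score is the squared-loss criterion $J = \frac{1}{2}\alpha^{\top}\hat{H}_{\text{te}}\alpha - \hat{h}_{\text{te}}^{\top}\alpha$ from the original LSMI formulation (lower is better), and `fit_and_score` is a hypothetical callback that would fit α on the training indices and return that score on the test indices.

```python
def basket_split(n, basket_count):
    """Assign indices 0..n-1 to basket_count baskets in round-robin order."""
    return [list(range(k, n, basket_count)) for k in range(basket_count)]

def lsmi_holdout_score(alpha, H_te, h_te):
    """Held-out criterion J = 1/2 alpha^T H_te alpha - h_te^T alpha (lower is better),
    where H_te and h_te are computed from the excluded basket only."""
    b = len(alpha)
    quad = sum(alpha[l] * H_te[l][k] * alpha[k] for l in range(b) for k in range(b))
    return 0.5 * quad - sum(hl * al for hl, al in zip(h_te, alpha))

def select_parameters(n, basket_count, sigma_list, lambda_list, fit_and_score):
    """Grid search over (sigma, lambda): for every basket, fit on the other baskets,
    score on the excluded one, and keep the pair with the lowest mean score."""
    baskets = basket_split(n, basket_count)
    best, best_score = None, float("inf")
    for sigma in sigma_list:
        for lam in lambda_list:
            total = 0.0
            for k, test in enumerate(baskets):
                train = [i for j, basket in enumerate(baskets) if j != k for i in basket]
                total += fit_and_score(train, test, sigma, lam)
            mean = total / basket_count
            if mean < best_score:
                best, best_score = (sigma, lam), mean
    return best, best_score
```

In the original LSMI formulation, the selected (σ, λ) pair is then used to refit α on all samples before computing the final estimate; whether the LSMI class refits in the same way is not stated here.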

The Least Squares Mutual Information method is implemented by the LSMI class.

The following constructors create an instance of the class and calculate Mutual Information between series X and Y:

| Constructor | Description | Performance |
|---|---|---|
| Set series and other parameters | Calculates the mutual information between series X and Y using the given candidate lists for the Lagrange multiplier (λ) and the Gaussian standard deviation (σ), the Kronecker delta flag, the basket count and the number of basis functions used to approximate the density ratio. lambdaList: candidate list from which λ is chosen; sigmaList: candidate list from which σ is chosen; useDelta: set to true if the second series is discrete; basketCount: number of baskets for the cross-validation procedure; basisSize: number of basis functions in the model. | |
| Set series and use default parameters | Calculates the mutual information between series X and Y with default parameters: lambdaList = 9 logarithmically spaced points between 10 and $10^{9}$; sigmaList = 9 logarithmically spaced points between $10^{-2}$ and $10^{2}$; useDelta = false; basketCount = 5; basisSize = min(200, input series length). | |

The class provides two methods:

| Method | Description | Performance |
|---|---|---|
| Out sample validate | Estimates the goodness of fit of the model on new samples. | |
| Log space generate | Generates n logarithmically spaced points between the decades $10^{a}$ and $10^{b}$; especially useful for creating frequency vectors. | |
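The log-space generator behaves like the familiar `logspace(a, b, n)` of MATLAB and NumPy. A minimal Python sketch follows; the name and signature are illustrative rather than the class's actual method, and the `n < 2` check is a choice of this sketch. It rebuilds the documented default sigmaList of 9 points between $10^{-2}$ and $10^{2}$.

```python
def logspace(a, b, n):
    """Return n points spaced evenly on a log scale from 10**a to 10**b (inclusive)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    step = (b - a) / (n - 1)  # equal spacing of the exponents
    return [10.0 ** (a + k * step) for k in range(n)]

# Default sigma candidates: 9 points between 10^-2 and 10^2 (half a decade apart).
sigma_list = logspace(-2, 2, 9)
```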

The class provides one property, MutualInformation, which holds the approximated mutual information between the two series.