Stepwise Least Squares (Stepwise LS)

Stepwise LS is a data-driven algorithmic modification of the OLS model. It is intended for cases where there are many competing candidate explanatory variables (regressors) but little or no prior knowledge for choosing one variable over another. It is not a rigorous statistical method but rather a variable-selection technique, and as such it cannot guarantee correct statistical inference or good out-of-sample prediction.

Given a (full) set of candidate explanatory variables (regressors) for a linear regression model, the Stepwise LS algorithm incrementally examines the variables for explanatory power, usually measured by a significance criterion, and then includes or excludes variables based on that criterion. The procedure terminates when no single step can improve the model.

The following algorithm is applied:

For each regressor we perform a statistical test of the null hypothesis H0: $\beta_i = 0$. Two significance levels are predefined: one for including a variable (penter) and one for removing it (premove). The task is to determine which variables should be included in or excluded from the model. A vector of Boolean elements, with one element per candidate variable, indicates which variables are currently included.

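On each iteration we calculate the regression parameters $b_i$ and test the hypothesis H0 for each variable. For this purpose we calculate the t-statistic of the test:

$$t_i = \frac{b_i}{\sqrt{\dfrac{s^2}{N}\left[(X^\top X)^{-1}\right]_{ii}}}$$

where $s^2$ is the sum of squared residuals,

$N$ is the number of observations minus the number of in-model regressors (including the currently tested one),

$\left[(X^\top X)^{-1}\right]_{ii}$ is the $i$-th diagonal element of $(X^\top X)^{-1}$, and $X$ is the matrix of in-model regressors' observations.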

If H0 is true, the t-statistic follows Student's t-distribution with $N$ degrees of freedom.
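The t-statistic computation described above can be sketched in plain C# without any FinMath types. This is a minimal, hypothetical helper (TStatSketch.Fit is not a FinMath member): it solves the normal equations for the in-model columns by Gauss-Jordan inversion of $X^\top X$ and returns $b$, the t-statistics and $N$. It assumes no intercept column is needed (for example, data already mean-centred) and takes $N$ as observations minus in-model regressors, as defined above.

```csharp
using System;

// Hypothetical helper (not a FinMath member). For the in-model regressors X it computes
// b = (X^T X)^{-1} X^T y and t_i = b_i / sqrt(s2 / N * [(X^T X)^{-1}]_ii),
// where s2 is the residual sum of squares and N = observations - in-model regressors.
// Assumes no intercept column is needed (e.g. X and y are already mean-centred).
static class TStatSketch
{
    // Gauss-Jordan inversion with partial pivoting; adequate for small, well-conditioned X^T X.
    static double[,] Invert(double[,] a)
    {
        int n = a.GetLength(0);
        var m = new double[n, 2 * n];
        for (int i = 0; i < n; i++)
        {
            for (int j = 0; j < n; j++) m[i, j] = a[i, j];
            m[i, n + i] = 1.0; // augment with the identity matrix
        }
        for (int c = 0; c < n; c++)
        {
            int p = c; // pick the row with the largest pivot
            for (int r = c + 1; r < n; r++)
                if (Math.Abs(m[r, c]) > Math.Abs(m[p, c])) p = r;
            for (int j = 0; j < 2 * n; j++) { double tmp = m[c, j]; m[c, j] = m[p, j]; m[p, j] = tmp; }
            double d = m[c, c];
            for (int j = 0; j < 2 * n; j++) m[c, j] /= d;
            for (int r = 0; r < n; r++)
            {
                if (r == c) continue;
                double f = m[r, c];
                for (int j = 0; j < 2 * n; j++) m[r, j] -= f * m[c, j];
            }
        }
        var inv = new double[n, n];
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++) inv[i, j] = m[i, n + j];
        return inv;
    }

    // x: rows are observations, columns are in-model regressors; y: regressand.
    public static (double[] b, double[] t, int dof) Fit(double[,] x, double[] y)
    {
        int n = x.GetLength(0), k = x.GetLength(1);
        var xtx = new double[k, k];
        var xty = new double[k];
        for (int i = 0; i < k; i++)
        {
            for (int j = 0; j < k; j++)
                for (int r = 0; r < n; r++) xtx[i, j] += x[r, i] * x[r, j];
            for (int r = 0; r < n; r++) xty[i] += x[r, i] * y[r];
        }
        var inv = Invert(xtx);
        var b = new double[k];
        for (int i = 0; i < k; i++)
            for (int j = 0; j < k; j++) b[i] += inv[i, j] * xty[j];

        double s2 = 0.0; // residual sum of squares
        for (int r = 0; r < n; r++)
        {
            double e = y[r];
            for (int i = 0; i < k; i++) e -= x[r, i] * b[i];
            s2 += e * e;
        }
        int dof = n - k; // N in the text
        var t = new double[k];
        for (int i = 0; i < k; i++) t[i] = b[i] / Math.Sqrt(s2 / dof * inv[i, i]);
        return (b, t, dof);
    }
}
```

A production implementation would more likely use a QR or Cholesky factorization than an explicit inverse; the inverse is used here only because the formula needs its diagonal.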

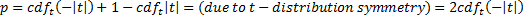

H0 is rejected at the specified significance level if the probability that a t-distributed random variable deviates from the mean by at least the absolute value of the calculated t-statistic is smaller than that significance level (penter when an out-of-model regressor is tested, premove when an in-model regressor is tested). This probability (the p-value) is calculated as follows:

$$p_i = 2\left(1 - \mathrm{cdf}_t\left(|t_i|;\, N\right)\right)$$

where $\mathrm{cdf}_t$ is the cumulative distribution function of the t-distribution with $N$ degrees of freedom.
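The two-sided p-value can be sketched in self-contained C# as follows. Instead of calling a library CDF, it relies on the standard identity $2\left(1 - \mathrm{cdf}_t(|t|; N)\right) = I_{N/(N+t^2)}(N/2,\, 1/2)$, where $I_x(a, b)$ is the regularized incomplete beta function, evaluated with a Lanczos log-gamma and the classic continued fraction (as in Numerical Recipes). TPValueSketch and its methods are hypothetical names; FinMath's own t-distribution class is not used because its API is not documented here.

```csharp
using System;

// Hypothetical helper (not part of FinMath): two-sided p-value of a t-statistic,
// p = 2 * (1 - cdf_t(|t|; N)) = I_x(N / 2, 1 / 2) with x = N / (N + t^2).
static class TPValueSketch
{
    // Lanczos approximation (g = 7, n = 9) of ln(Gamma(x)).
    static readonly double[] Lanczos =
    {
        0.99999999999980993, 676.5203681218851, -1259.1392167224028,
        771.32342877765313, -176.61502916214059, 12.507343278686905,
        -0.13857109526572012, 9.9843695780195716e-6, 1.5056327351493116e-7
    };

    static double LogGamma(double x)
    {
        if (x < 0.5) // reflection formula
            return Math.Log(Math.PI / Math.Sin(Math.PI * x)) - LogGamma(1.0 - x);
        x -= 1.0;
        double sum = Lanczos[0];
        for (int i = 1; i < Lanczos.Length; i++) sum += Lanczos[i] / (x + i);
        double t = x + 7.5;
        return 0.5 * Math.Log(2.0 * Math.PI) + (x + 0.5) * Math.Log(t) - t + Math.Log(sum);
    }

    // Continued fraction for the incomplete beta function (modified Lentz method).
    static double BetaContinuedFraction(double a, double b, double x)
    {
        const double Tiny = 1e-300, Eps = 1e-15;
        double qab = a + b, qap = a + 1.0, qam = a - 1.0;
        double c = 1.0, d = 1.0 - qab * x / qap;
        if (Math.Abs(d) < Tiny) d = Tiny;
        d = 1.0 / d;
        double h = d;
        for (int m = 1; m <= 300; m++)
        {
            int m2 = 2 * m;
            double aa = m * (b - m) * x / ((qam + m2) * (a + m2)); // even step
            d = 1.0 + aa * d; if (Math.Abs(d) < Tiny) d = Tiny;
            c = 1.0 + aa / c; if (Math.Abs(c) < Tiny) c = Tiny;
            d = 1.0 / d;
            h *= d * c;
            aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2)); // odd step
            d = 1.0 + aa * d; if (Math.Abs(d) < Tiny) d = Tiny;
            c = 1.0 + aa / c; if (Math.Abs(c) < Tiny) c = Tiny;
            d = 1.0 / d;
            double delta = d * c;
            h *= delta;
            if (Math.Abs(delta - 1.0) < Eps) break;
        }
        return h;
    }

    static double RegularizedIncompleteBeta(double a, double b, double x)
    {
        if (x <= 0.0) return 0.0;
        if (x >= 1.0) return 1.0;
        double front = Math.Exp(LogGamma(a + b) - LogGamma(a) - LogGamma(b)
                                + a * Math.Log(x) + b * Math.Log(1.0 - x));
        // Use the continued fraction where it converges quickly, otherwise the symmetry relation.
        if (x < (a + 1.0) / (a + b + 2.0)) return front * BetaContinuedFraction(a, b, x) / a;
        return 1.0 - front * BetaContinuedFraction(b, a, 1.0 - x) / b;
    }

    // Probability that a t-distributed variable with dof degrees of freedom
    // deviates from zero by at least |t|.
    public static double TwoSidedPValue(double t, int dof)
    {
        double x = dof / (dof + t * t);
        return RegularizedIncompleteBeta(dof / 2.0, 0.5, x);
    }
}
```

With this helper, H0 for a tested regressor would be rejected at level penter (or premove) when TwoSidedPValue(t, N) is below that level.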

For the out-of-model variables, choose the variable with the smallest p-value. If that p-value is less than penter, the variable is included in the model and we return to the first step.

If no variable was included, then for the in-model variables choose the variable with the greatest p-value. If that p-value is greater than premove, the variable is removed from the model and we return to the first step.

If no variable is included or excluded within one iteration step, the algorithm stops.
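The decision rule of a single iteration can be sketched as below, assuming the p-values of all candidates have already been computed (for an out-of-model variable, from the model with only that variable added). StepwiseStepSketch.Step is hypothetical, and skipping variables flagged by the inKeep constructor parameter during removal is an assumption, not a documented detail of StepwiseLS.

```csharp
using System;

// Hypothetical sketch of one stepwise iteration's decision rule (not the FinMath code).
static class StepwiseStepSketch
{
    // Updates inModel in place. Returns true if a variable was included or removed
    // (the caller refits and repeats), false if the algorithm should stop.
    public static bool Step(bool[] inModel, bool[] keep, double[] pValues, double penter, double premove)
    {
        if (!(penter < premove)) throw new ArgumentException("penter must be less than premove.");

        // 1. Try to include the out-of-model variable with the smallest p-value.
        int best = -1;
        for (int i = 0; i < inModel.Length; i++)
            if (!inModel[i] && (best < 0 || pValues[i] < pValues[best])) best = i;
        if (best >= 0 && pValues[best] < penter)
        {
            inModel[best] = true;
            return true;
        }

        // 2. Otherwise try to remove the in-model variable with the greatest p-value,
        //    skipping variables that must stay in the model (assumed inKeep semantics).
        int worst = -1;
        for (int i = 0; i < inModel.Length; i++)
            if (inModel[i] && !keep[i] && (worst < 0 || pValues[i] > pValues[worst])) worst = i;
        if (worst >= 0 && pValues[worst] > premove)
        {
            inModel[worst] = false;
            return true;
        }

        // 3. Nothing was included or excluded: stop.
        return false;
    }
}
```

A driver loop would refit the model, recompute the p-values and call Step until it returns false or the iteration limit is reached.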

The implementation uses Student's t-test to assess the statistical significance of the regression coefficients. It includes in the model the variables corresponding to the most significant coefficients that meet the enter p-level condition (p-value less than penter). Similarly, the variables corresponding to the least significant coefficients are excluded from the model if their p-values meet the exit p-level condition (p-value greater than premove).

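For the logic to be consistent, penter must be less than premove; otherwise a variable whose p-value lies between premove and penter could be included on one step and removed on the next, indefinitely.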

A notable feature of the implementation is that the design matrix must contain only the columns corresponding to explanatory variables (no constant column); the intercept term of the regression is computed separately.
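One common way to compute the intercept separately (assumed here, not taken from the FinMath source) is to estimate the slopes on mean-centred data and then recover $a = \bar{y} - \sum_j b_j \bar{x}_j$. The minimal sketch below shows the slope for a single regressor and the intercept formula for any number of columns; InterceptSketch and its methods are hypothetical.

```csharp
using System;

// Hypothetical helper (not a FinMath member): fit without a constant column, then recover the intercept.
static class InterceptSketch
{
    static double Mean(double[] v)
    {
        double s = 0.0;
        foreach (double e in v) s += e;
        return s / v.Length;
    }

    // Slope of a single regressor estimated on mean-centred data; no constant column is needed.
    public static double CenteredSlope(double[] x, double[] y)
    {
        double mx = Mean(x), my = Mean(y), sxy = 0.0, sxx = 0.0;
        for (int i = 0; i < x.Length; i++)
        {
            sxy += (x[i] - mx) * (y[i] - my);
            sxx += (x[i] - mx) * (x[i] - mx);
        }
        return sxy / sxx;
    }

    // Intercept a = mean(y) - sum_j b_j * mean(x_j); columns[j] holds the observations of regressor j.
    public static double Intercept(double[][] columns, double[] y, double[] b)
    {
        double a = Mean(y);
        for (int j = 0; j < columns.Length; j++) a -= b[j] * Mean(columns[j]);
        return a;
    }
}
```

For data generated exactly by $y = 1 + 2x$, the centred slope is 2 and the recovered intercept is 1.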

The Stepwise LS model is implemented by the StepwiseLS class.

To initialize an instance of the class, use one of the two provided constructors: one takes user-defined algorithm-specific parameters and the other uses their default values. The parameters are explained below:

Each constructor creates a class instance and fits the model to the given observations of the regressors and the regressand. The following table presents the input parameters of the first constructor in the order they are used:

| Parameter | Description |
|---|---|
| Matrix regressors and Vector regressand | Observations of the regressors and the regressand, respectively. Columns of the design matrix represent the set of explanatory variables that are candidates for inclusion in the model (the full design matrix); rows of the matrix correspond to observations. |
| Boolean[] inMask and Boolean[] inKeep | inMask is an array of flags, one per explanatory variable, indicating whether the variable is initially included in the model (true) or not (false); if the number of variables is small, it is recommended to start with the full set of variables (all true). inKeep is an array of flags indicating whether a variable must be permanently included in the model (true) or not (false); false means that the variable is included or excluded according to the significance criterion. The default values of the inMask and inKeep arrays are all false. |
| Double penter and Double premove | Values between zero and one that specify the enter and exit p-levels, respectively. The smaller the enter p-level, the stricter the significance requirement for a variable to be included in the model; the exit p-level plays the analogous role for excluding variables (an in-model variable is removed when its p-value exceeds premove). The default values are: |
| Int32 iterationCount | Maximum number of iterations of the selection algorithm; normally it should exceed the number of explanatory variables. The default value is Int32.MaxValue. |
| Boolean scale | true forces the algorithm to center and scale the regressors (replace the values in the columns of the design matrix with their z-scores before fitting). The default value is false. |
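The effect of scale = true (replacing each column of the design matrix with its z-scores) can be sketched as follows. The helper is hypothetical, and using the sample standard deviation (denominator n - 1) is an assumption, since the documentation does not say which denominator StepwiseLS uses.

```csharp
using System;

// Hypothetical helper (not a FinMath member): column-wise z-scores of a design matrix.
static class ZScoreSketch
{
    // Returns a copy of x in which every column is replaced by (value - mean) / sd,
    // with sd the sample standard deviation (n - 1 denominator, an assumption).
    // A constant column has sd = 0 and yields NaN values here.
    public static double[,] Standardize(double[,] x)
    {
        int n = x.GetLength(0), k = x.GetLength(1);
        var z = new double[n, k];
        for (int j = 0; j < k; j++)
        {
            double mean = 0.0;
            for (int r = 0; r < n; r++) mean += x[r, j];
            mean /= n;

            double ss = 0.0;
            for (int r = 0; r < n; r++) ss += (x[r, j] - mean) * (x[r, j] - mean);
            double sd = Math.Sqrt(ss / (n - 1));

            for (int r = 0; r < n; r++) z[r, j] = (x[r, j] - mean) / sd;
        }
        return z;
    }
}
```

Coefficients estimated on scaled columns are in units of standard deviations, which is why coefficient values reported after fitting can differ depending on the scale flag.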

The class implements the basic interface and extends it with the following properties:

| Property | Description |
|---|---|
| | Returns the intercept term value. |
| | Boolean mask for distinguishing the in-model and out-of-model variables: true elements of the array indicate the in-model variables. |
| InModelIndices | Indices of the in-model variables; each index points to a column of the design matrix. |
| StepwiseParameters | Vector of regression coefficients. For in-model regressors, the values are the regression Parameters. Each out-of-model coefficient is given as if only the corresponding variable were added to the model alongside the current in-model variables. StepwiseParameters depend on the scale flag set at initialization. |
| | p-values for all regressors. |
| | t-statistics for all regressors. |
| | The number of iterations performed before the algorithm terminated. |
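The Boolean mask and the in-model indices carry the same information. A hypothetical conversion from a mask to column indices, in plain C# (MaskSketch is not a FinMath member):

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: converts a Boolean in-model mask into the indices of the in-model columns.
static class MaskSketch
{
    public static int[] InModelIndices(bool[] mask)
    {
        var indices = new List<int>();
        for (int i = 0; i < mask.Length; i++)
            if (mask[i]) indices.Add(i); // column i of the design matrix is in the model
        return indices.ToArray();
    }
}
```

For the mask { false, true, true, false }, the in-model columns are 1 and 2.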

An example of Stepwise Least Squares usage:

```csharp
using System;
using FinMath.LinearAlgebra;
using FinMath.LeastSquares;
using FinMath.Statistics.Distributions;

namespace FinMath.Samples
{
    class StepwiseLSSample
    {
        static void Main()
        {
            #region Generate inputs.

            // Input parameters.
            const Int32 inSampleObservations = 100;
            const Int32 outOfSampleObservations = 100;
            const Int32 regressorsCount = 10;
            const Double homoscedasticVariance = 0.1;

            // Generate synthetic data according to the model Y = X * Beta + Er.
            // Allocate the real beta vector of the model.
            Vector realBeta = Vector.Random(regressorsCount);
            // Randomly remove the influence of some regressors on the regressand.
            for (Int32 i = 0; i < regressorsCount; ++i)
                realBeta[i] = FMControl.DefaultGenerator.Value.Next(3) == 0 ? 0.0 : realBeta[i];

            // Allocate in-sample observations of the regressors X.
            Matrix inSampleRegressors = Matrix.Random(inSampleObservations, regressorsCount);
            // Allocate out-of-sample observations of the regressors X.
            Matrix outOfSamplesRegressors = Matrix.Random(outOfSampleObservations, regressorsCount);

            // Calculate in-sample regressand values Y, without errors.
            Vector inSampleRegressand = inSampleRegressors * realBeta;
            // Calculate out-of-sample regressand values Y, without errors.
            Vector outOfSamplesRegressand = outOfSamplesRegressors * realBeta;

            // Add noise: the model is Y = X * Beta + Er, where Er ~ N(0, v) and v is a constant variance.
            Normal normalDistribution = new Normal(0.0, homoscedasticVariance);
            // Add errors to the in-sample regressand values.
            inSampleRegressand.PointwiseAddInPlace(normalDistribution.Sample(inSampleObservations));
            // Add errors to the out-of-sample regressand values.
            outOfSamplesRegressand.PointwiseAddInPlace(normalDistribution.Sample(outOfSampleObservations));

            #endregion

            // Compute least squares.
            StepwiseLS ls = new StepwiseLS(inSampleRegressors, inSampleRegressand);
            // Get least squares beta.
            Vector lsBeta = ls.Parameters;

            Console.WriteLine("Parameters:");
            Console.WriteLine(" Real parameters (which were used for data generation): ");
            Console.WriteLine(" " + realBeta.ToString("0.000"));
            Console.WriteLine(" Parameters calculated by least squares: ");
            Console.WriteLine(" " + lsBeta.ToString("0.000"));
            Console.WriteLine(" Indices of in-model regressors: ");
            Console.WriteLine(" " + ls.InModelIndices);

            Console.WriteLine("");
            Console.WriteLine("Least Squares Results:");
            Console.WriteLine(" Parameters difference norm is: " + (realBeta - lsBeta).L2Norm());
            // Build forecast for out-of-sample regressand values and estimate residuals.
            Console.WriteLine(" Out-of-sample error is: " + ls.EstimateResiduals(outOfSamplesRegressors, outOfSamplesRegressand).L2Norm());
        }
    }
}
```